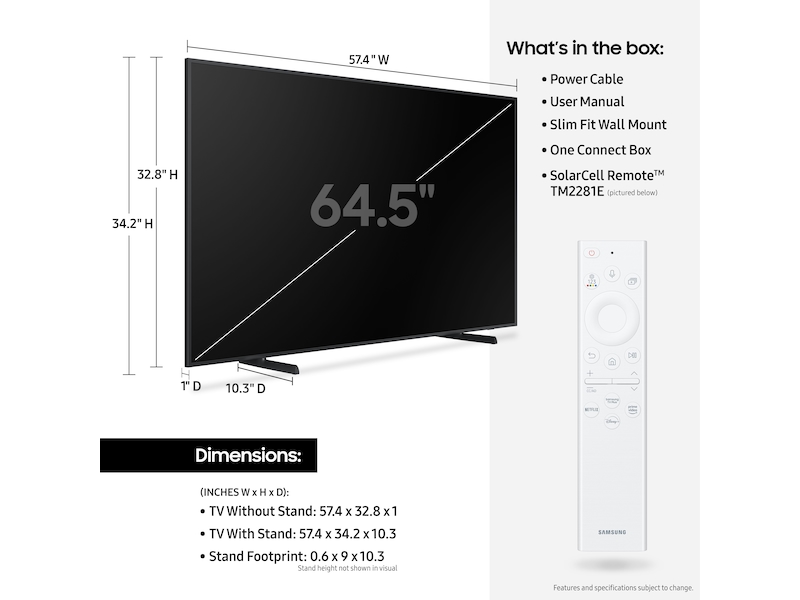

SAMSUNG 50-Inch Class Frame Series, 4K Quantum HDR Smart TV with Alexa Built-in (QN50LS03AAFXZA, 2021 Model) (Amazon.com)

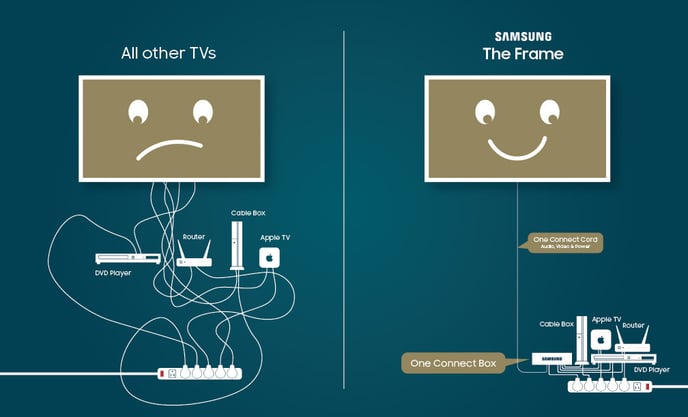

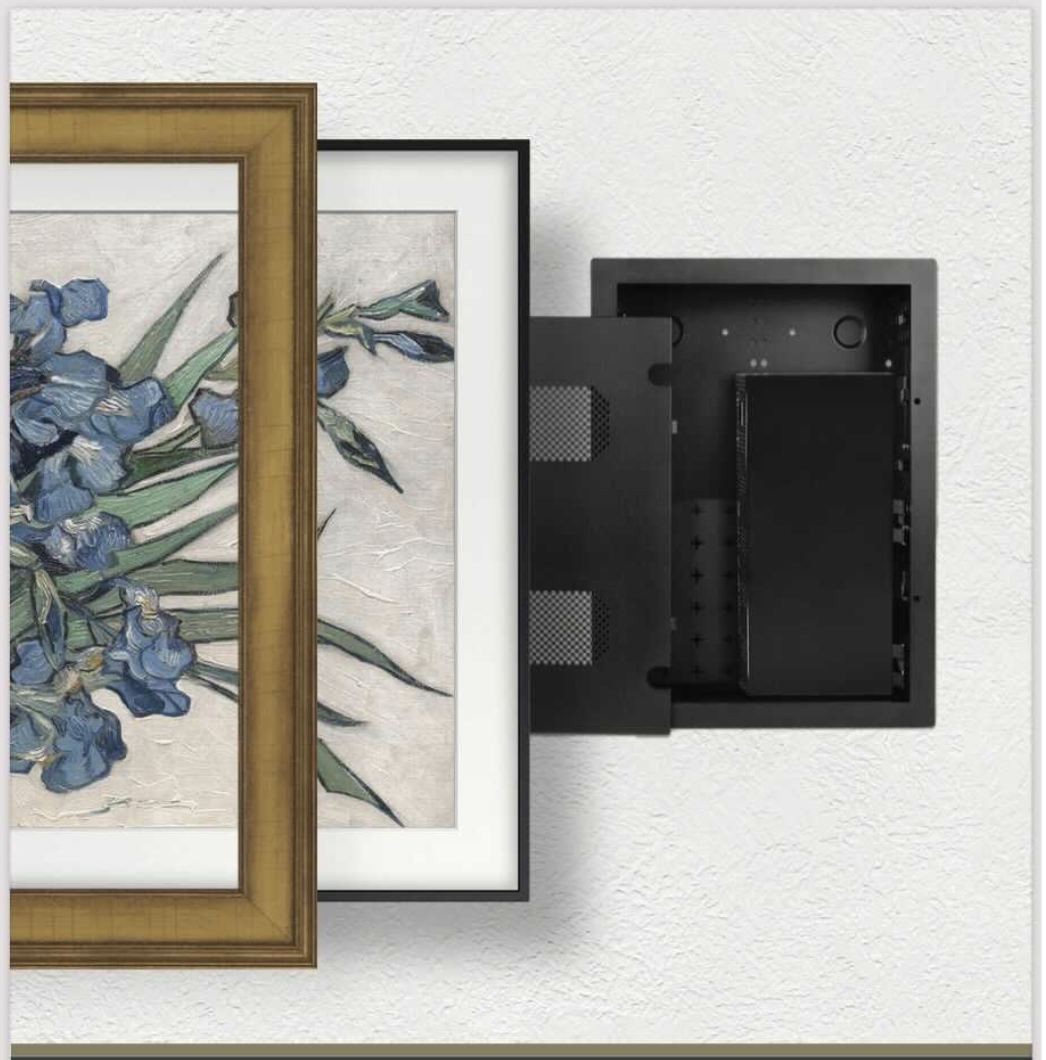

SAMSUNG 65" Class The Frame QLED Smart 4K UHD TV (2019), Works with Alexa (Amazon.com)

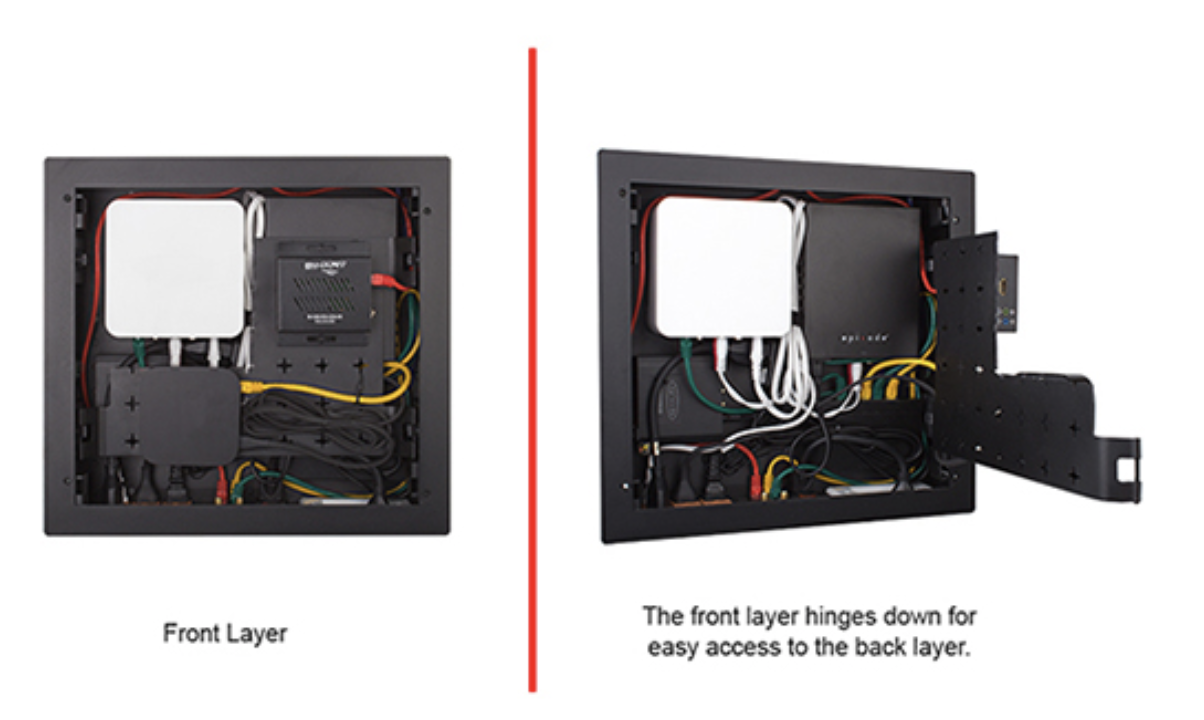

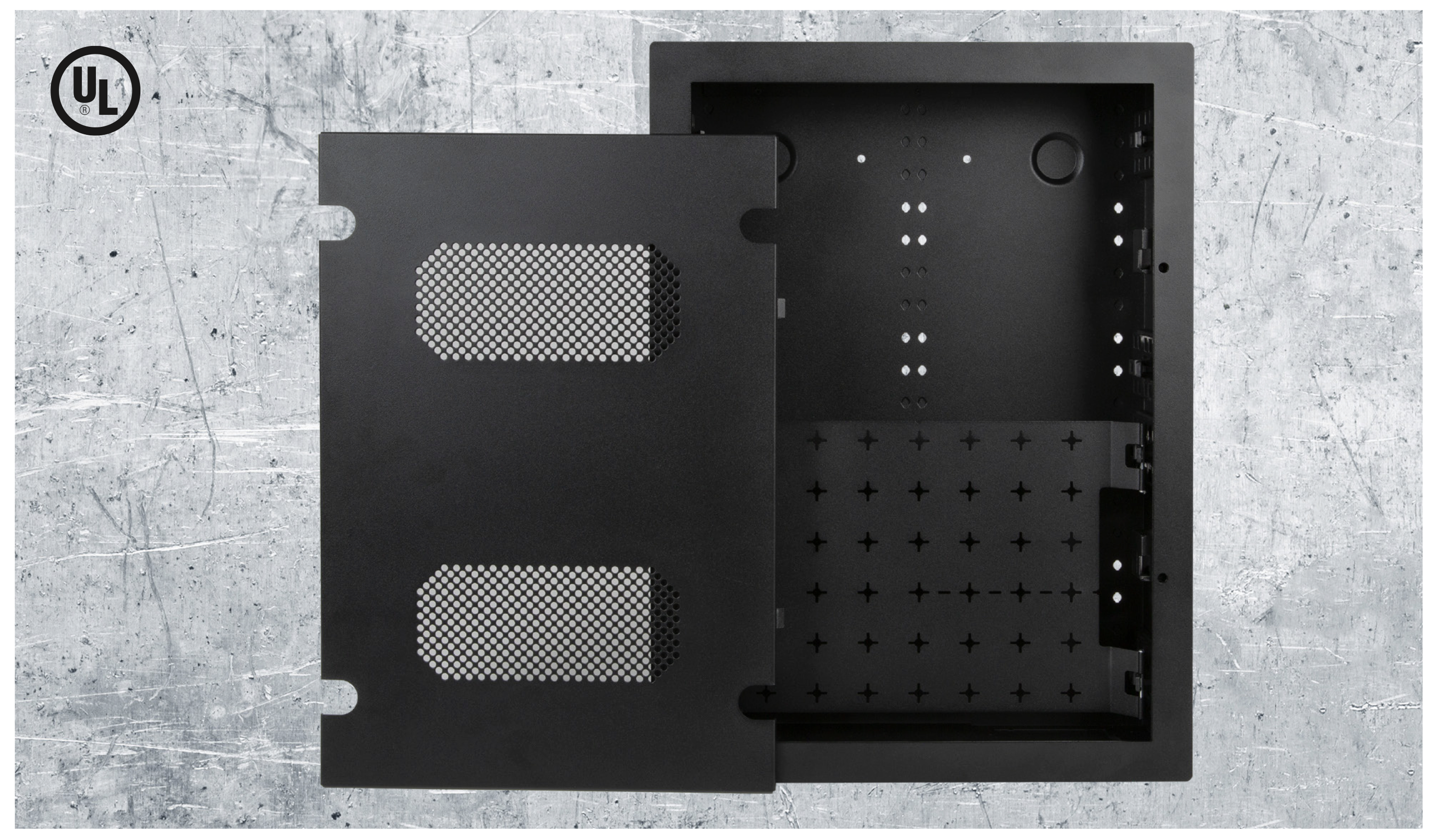

5m One Connect In-Wall Cable for QLED & Frame TVs (2019), VG-SOCR86U/ZA (Samsung US)