M42 200 mm Hole Saw, Drill Bit Cutter with Drill Bit Adapter, Hole Cutter for Iron and Aluminium Pipe, 200mm : Amazon.it: Fai da

WEKOW 1PCS 20-200mm M42 HSS Hole Saw Cutter, Aurora Green Metal Drill Bit for Wood, Iron and Aluminium (Aurora Green, 20MM) : Amazon.it: Fai da te

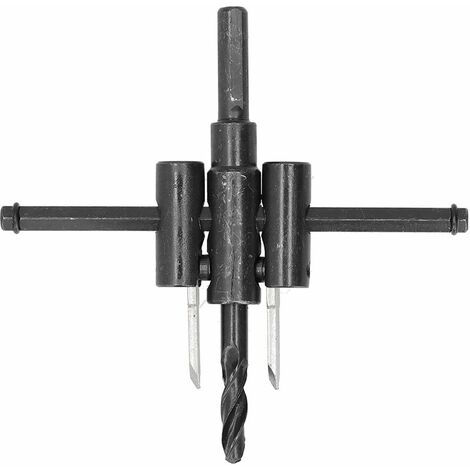

SHEENO 20-Piece Hole Saw Set, 19-127 mm Hole Cutters, Carbon Steel, for Drills, for Wood and Drywall : Amazon.it: Fai da te